This is a guest post, offered by a colleague of mine: Russ Whitear.

Russ is the UCS Director guru in our team and, when I saw an internal email where he explained how to use the UCS Director API from an external client, I asked his permission to publish it.

I believe it will be useful for many customers and partners to integrate UCSD in a broader ecosystem.

This short post explains how to invoke UCS Director workflows via the northbound REST API. Authentication and role are controlled by a secure token. Each user account within UCS Director has a unique API token, which can be accessed via the GUI as follows.

First, from within the UCS Director GUI, click the current username at the top right of the screen.

User Information will then be presented. Select the ‘Advanced’ tab in order to reveal the API Access token for that user account.

Once retrieved, this token needs to be added as an HTTP header for all REST requests to UCS Director. The HTTP header name must be X-Cloupia-Request-Key.

X-Cloupia-Request-Key : E0BEA013C6D4418C9E8B03805156E8BB

Once this step is complete, the next requirement is to construct a URI for the HTTP request that invokes the required UCS Director workflow and supplies the required user inputs (the inputs that an end user would ordinarily enter when executing the workflow manually).

UCS Director has two versions of the northbound API. Version 1 uses HTTP GET requests with JSON (JavaScript Object Notation) formatted data in the URI. Version 2 uses HTTP POST with an XML (eXtensible Markup Language) body.

Workflow invocation for UCS Director uses Version 1 of the API (JSON). A typical request URL would look similar to this:

    http://<UCSD_IP>/app/api/rest?formatType=json&opName=userAPISubmitWorkflowServiceRequest&opData={SOME_JSON_DATA_HERE}
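
As a rough illustration, the three query parameters above can be assembled programmatically so that the JSON in opData is percent-encoded safely. This is only a sketch: the function name `build_ucsd_url` and the address 192.0.2.10 are placeholders invented for this example, not part of UCS Director.

```python
from urllib.parse import urlencode


def build_ucsd_url(host, op_name, op_data, scheme="http"):
    """Assemble a Version 1 API URL from its three query parameters.

    `host` and `scheme` are assumptions for this sketch; use whatever
    address and protocol your own UCS Director instance listens on.
    """
    query = urlencode({
        "formatType": "json",
        "opName": op_name,
        "opData": op_data,  # a JSON string; urlencode percent-encodes it
    })
    return f"{scheme}://{host}/app/api/rest?{query}"


# Placeholder address and an empty JSON object, purely for demonstration.
url = build_ucsd_url("192.0.2.10", "userAPISubmitWorkflowServiceRequest", "{}")
print(url)
```

Encoding the query string this way avoids having to escape braces, quotes and spaces by hand when the JSON grows.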

A very quick JSON refresher

JSON formatted data consists of either dictionaries or lists. Dictionaries consist of name/value pairs, with each name separated from its value by a colon. The pairs themselves are separated by commas, and dictionaries are bounded by curly braces. For example:

    {"animal":"dog", "mineral":"rock", "vegetable":"carrot"}

Lists are used in instances where a single value is insufficient. Lists are comma separated and bounded by square brackets. For example:

    {"animals":["dog","cat","horse"]}

To ease readability, it is often worth temporarily expanding the structure to see what is going on.

    {
        "animals":[
            "dog",
            "cat",
            "horse"
        ]
    }
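
If you would rather not expand the structure by hand, most languages can do it for you. Here is a small sketch using Python's standard `json` module; the variable names are arbitrary.

```python
import json

compact = '{"animals":["dog","cat","horse"]}'

# Parse the text into ordinary Python objects (a dict holding a list).
data = json.loads(compact)

# Serialise it again with indentation, one element per line.
expanded = json.dumps(data, indent=4)
print(expanded)
```

Parsing and re-serialising also acts as a quick syntax check, since `json.loads` raises an error on malformed input.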

Now things get interesting. It is possible (and common) for dictionaries to contain lists, and for those lists to contain dictionaries rather than just simple elements (dog, cat, horse, etc.). Dictionaries can also contain other dictionaries:

    {
        "all_things":{
            "animals":[
                "dog",
                "cat",
                "horse"
            ],
            "minerals":[
                "Quartz",
                "Calcite"
            ],
            "vegetable":"carrot"
        }
    }
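
To make the nesting concrete, the sketch below walks through the same structure in Python, then shows a list whose elements are dictionaries. The second data set (`pairs`) is made up for illustration only.

```python
import json

doc = json.loads('''
{"all_things": {
    "animals": ["dog", "cat", "horse"],
    "minerals": ["Quartz", "Calcite"],
    "vegetable": "carrot"
}}
''')

all_things = doc["all_things"]            # a dictionary inside a dictionary
first_animal = all_things["animals"][0]   # a list inside that dictionary
mineral_count = len(all_things["minerals"])

# A list whose elements are dictionaries (hypothetical data for illustration).
pairs = json.loads(
    '[{"name": "animal", "value": "dog"}, {"name": "mineral", "value": "rock"}]'
)
lookup = {pair["name"]: pair["value"] for pair in pairs}

print(first_animal, mineral_count, lookup["mineral"])
```

The name/value layout of `pairs` is the same pattern that UCS Director uses for workflow inputs.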

With an understanding of how JSON objects are structured, we can now look at the required formatting of the URI for UCS Director. When invoking a workflow via the REST API, UCS Director must be called with three parameters: param0, param1 and param2. ‘param0’ contains the name of the workflow to be invoked. The workflow name must match EXACTLY the name of the actual workflow. ‘param1’ contains a dictionary, which itself contains a list of dictionaries, one per user input, each giving the name of the input and the value to be inserted for it (as though an end user had invoked the workflow via the GUI and entered the values manually). ‘param2’ is set to -1 throughout this post.

The structure of the UCS Director JSON URI looks like so:

    {
        param0:"<WORKFLOW_NAME>",
        param1:{
            "list":[
                {"name":"<INPUT_1>","value":"<INPUT_VALUE>"},
                {"name":"<INPUT_2>","value":"<INPUT_VALUE>"}
            ]
        },
        param2:-1
    }
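
The same structure can be generated from a simple mapping of input names to values. The sketch below is one possible way to do it; `build_op_data` is a name made up for this example. Note that `json.dumps` always quotes the keys (`"param0"` rather than `param0`), so this assumes that UCS Director accepts standard JSON with quoted keys as well as the unquoted form shown above.

```python
import json


def build_op_data(workflow_name, inputs, param2=-1):
    """Return the opData JSON string for a workflow invocation.

    `inputs` maps each user input name to its value. Keys are emitted
    quoted, which is an assumption about what UCS Director accepts.
    """
    payload = {
        "param0": workflow_name,
        "param1": {
            "list": [{"name": name, "value": value}
                     for name, value in inputs.items()]
        },
        "param2": param2,
    }
    # Compact separators keep the resulting URL as short as possible.
    return json.dumps(payload, separators=(",", ":"))


op_data = build_op_data("<WORKFLOW_NAME>", {"<INPUT_1>": "<INPUT_VALUE>"})
print(op_data)
```

Building the string from a dictionary makes quoting mistakes, such as a missing bracket or a stray quote, much less likely than typing the JSON by hand.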

So, let’s see this in action. Take the following workflow, which is named ‘Infoblox Register New Host’ and has the user inputs ‘Infoblox IP:’, ‘Infoblox Username:’, ‘Infoblox Password:’, ‘Hostname:’, ‘Domain:’ and ‘Network Range:’.

The correct JSON object (shown here in expanded form) would look like so:

Note once more that the input names must match EXACTLY those of the actual workflow inputs.

After removing all of the readability formatting, the full URL required in order to invoke this workflow with the ‘user’ inputs as shown above would look like this:
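
For readers who prefer to generate the URL rather than type it, here is a hedged sketch for this workflow. The workflow name and input names are taken from the text above; every value (addresses, credentials, hostname, domain, range) is an invented placeholder, as is the UCS Director address 192.0.2.10. The quoted JSON keys produced by `json.dumps` are an assumption about what UCS Director accepts.

```python
import json
from urllib.parse import urlencode, urlsplit, parse_qs

# Input names come from the workflow; all values are placeholders.
inputs = {
    "Infoblox IP:": "192.0.2.20",
    "Infoblox Username:": "example-user",
    "Infoblox Password:": "example-password",
    "Hostname:": "host01",
    "Domain:": "example.com",
    "Network Range:": "192.0.2.0/24",
}

op_data = json.dumps({
    "param0": "Infoblox Register New Host",
    "param1": {"list": [{"name": k, "value": v} for k, v in inputs.items()]},
    "param2": -1,
}, separators=(",", ":"))

url = "http://192.0.2.10/app/api/rest?" + urlencode({
    "formatType": "json",
    "opName": "userAPISubmitWorkflowServiceRequest",
    "opData": op_data,
})
print(url)

# Decode the query string again to confirm nothing was lost in encoding.
decoded = json.loads(parse_qs(urlsplit(url).query)["opData"][0])
```

The round trip at the end is a cheap sanity check that spaces, colons and slashes in the input names and values survive the URL encoding.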

Now that we have our URL and authentication token HTTP header, we can simply enter this information into a web-based REST client (e.g. RESTclient for Firefox or Postman for Chrome) and execute the request.
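
The same request can also be sent from a script. The following is a minimal sketch using only the Python standard library; the `invoke` function, the stub opener and the sample reply are all invented for this example so that it runs without a real UCS Director instance. Replace the stub with the default opener to make a real call.

```python
import io
import json
import urllib.request


def invoke(url, api_key, opener=urllib.request.urlopen, timeout=30):
    """Send a GET request carrying the API key header; return the parsed JSON reply."""
    request = urllib.request.Request(
        url, headers={"X-Cloupia-Request-Key": api_key}
    )
    with opener(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Offline demonstration: a stub opener stands in for UCS Director.
captured = {}


def fake_opener(request, timeout):
    captured["request"] = request
    # Hypothetical reply body, shaped after the fields described in this post.
    return io.BytesIO(b'{"serviceResult": 123, "serviceError": null}')


reply = invoke(
    "http://192.0.2.10/app/api/rest?formatType=json",
    "E0BEA013C6D4418C9E8B03805156E8BB",
    opener=fake_opener,
)
print(reply)
```

Passing the opener as a parameter keeps the function easy to test, since the network call can be swapped for a stub as shown.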

If the request is successful, UCS Director will respond with a “serviceError” of null (no error), and the “serviceResult” will contain the service request ID for the newly invoked workflow:
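
A script consuming the reply might check these two fields as follows. This is a sketch only: the function name is arbitrary and the sample reply strings are invented, containing just the two fields mentioned above (a real reply may carry more).

```python
import json


def service_request_id(reply_text):
    """Return the service request ID, or raise if UCS Director reported an error."""
    reply = json.loads(reply_text)
    if reply.get("serviceError") is not None:
        raise RuntimeError(f"UCS Director reported an error: {reply['serviceError']}")
    return reply["serviceResult"]


# Hypothetical reply bodies for illustration.
ok_reply = '{"serviceResult": 123, "serviceError": null}'
bad_reply = '{"serviceResult": null, "serviceError": "example failure"}'

print(service_request_id(ok_reply))

try:
    service_request_id(bad_reply)
except RuntimeError as exc:
    error_message = str(exc)
    print(error_message)
```

Raising on a non-null “serviceError” keeps the calling code from mistaking a failed submission for a valid service request ID.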

Progress of the workflow can be monitored either through further API requests or via the UCS Director GUI:

Service request logging can likewise be monitored either through further API calls or via the UCS Director GUI:

This concludes the example, which you can easily test on your own instance of UCS Director or, if you don't have one at hand, in a demo lab on dcloud.cisco.com.

It should be enough to demonstrate how simple it is to integrate the automation engine provided by UCSD when you want to execute its workflows from an external system: a front-end portal, another orchestrator, or your own custom scripts.

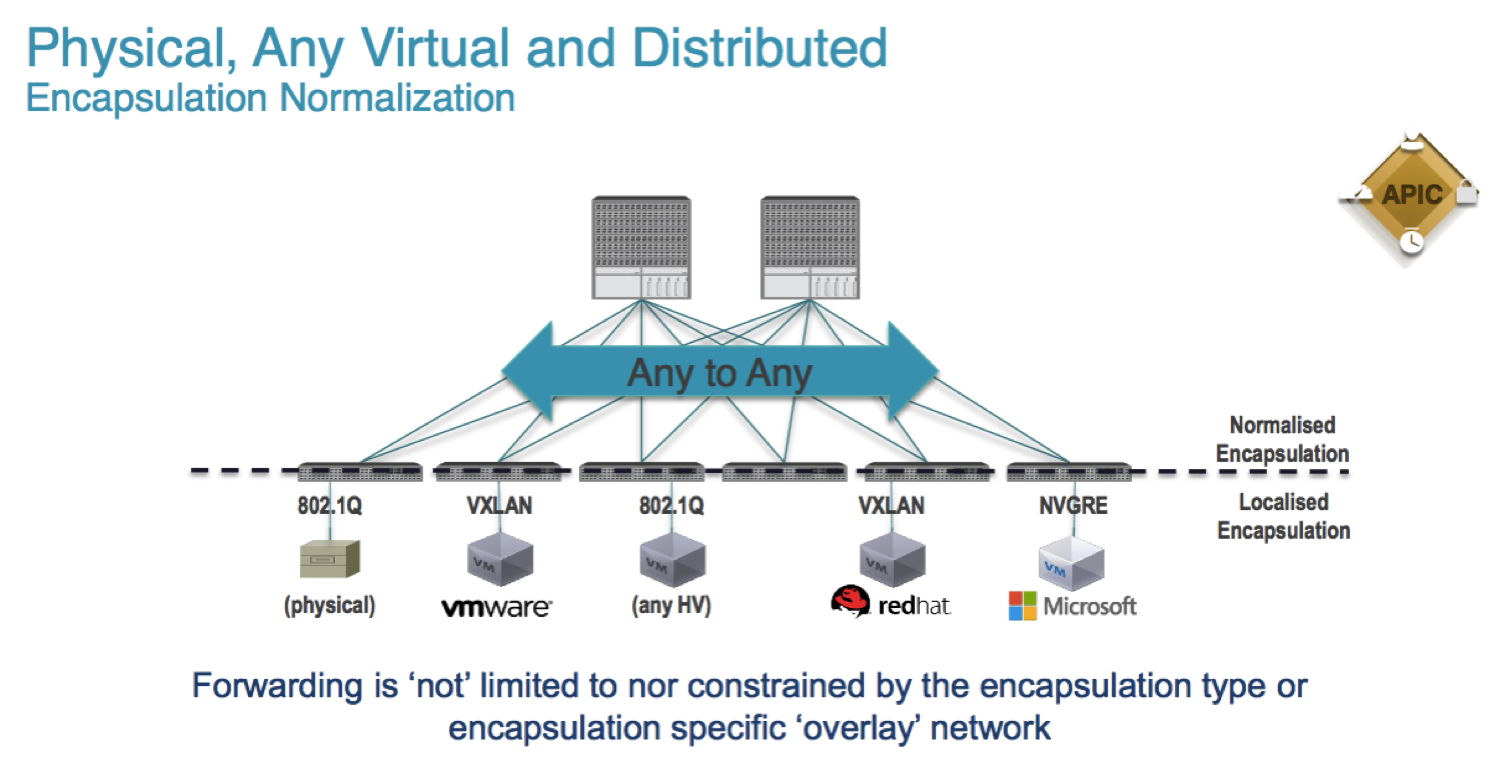

See also The Elastic Cloud project - Porting to UCSD for the deployment of a 3-tier application to 3 different hypervisors, using OpenStack and ACI with Cisco UCS Director.